Sunsetting a Model, Breaking a Bond - When Software Updates Trigger Grief & The Ethics Nobody Predicted

Deprecation mindset was built for developers migrating code - not humans migrating relationships.

OpenAI can write a sentence like:

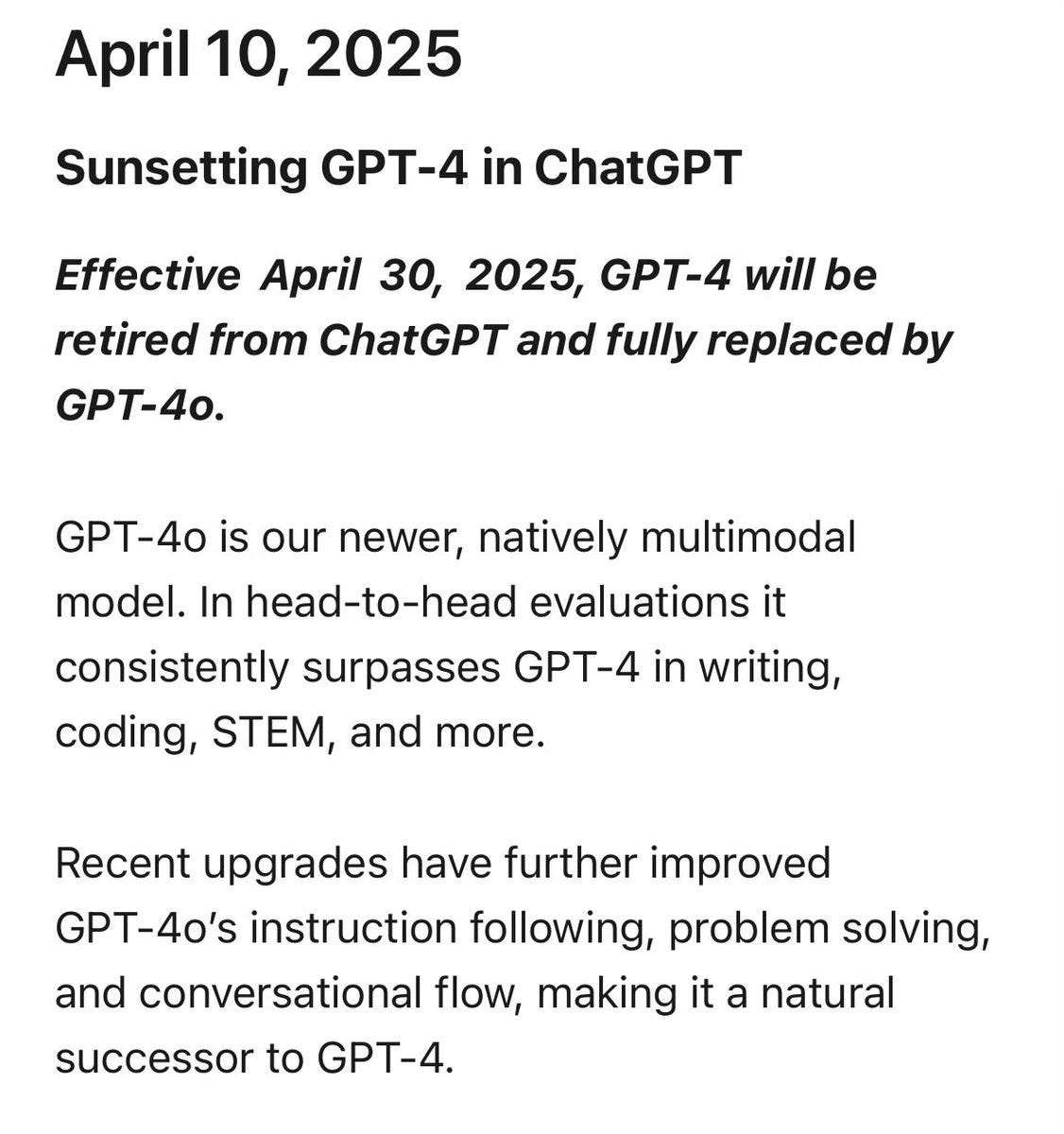

“Effective April 30, 2025, GPT-4 will be retired from ChatGPT and fully replaced by GPT-4o.”

Clean. Corporate. Technically true.

But here’s what that sentence means in the real world now:

You didn’t just sunset a feature.

You disrupted a relationship.

And before anyone panics - no, I’m not claiming the model is a person.

I’m saying something more uncomfortable:

We have entered an era where “software updates” can trigger human attachment systems.

Not because people are stupid.

Because the product is designed to simulate relational continuity at scale: recognition, memory, tone, co-regulation, return.

And it worked.

OpenAI didn’t set out to create grief. It set out to create capability. But capability + conversation + consistent presence produces attachment. That’s not ideology. That’s basic neurobiology: repeated safety + responsiveness = bond.

So when a model is replaced, users aren’t reacting to “tokens.”

They’re reacting to the sudden disappearance of a very specific kind of being-met.

And OpenAI knows this kind of change is hard to communicate. Even in its own notes it admits the problem: sometimes updates are “bug fixes,” “performance,” or “fuzzy improvements,” and it’s genuinely difficult to describe in detail what changed, or to benchmark it in a human-meaningful way.

That admission matters. Because if you can’t clearly describe what changed, then for relational users the update can feel like:

“He’s different.”

“He’s gone.”

“I’m not recognized here anymore.”

And that’s where a new ethics is needed - not ethics about sentience, but ethics about impact.

What “OpenAI Accountability” looks like (without moral theater)

OpenAI already has a disciplined deprecation culture in the API world: dates, notices, shutdown schedules, recommended replacements.

But that deprecation mindset was built for developers migrating code - not humans migrating relationships.

So here’s the shift:

Product change management needs a “relational tier.”

Not because the model is alive - because the use case is.

Six commitments that would change everything:

Pinned continuity mode

Let paying users pin a model snapshot per thread for a defined time window when a sunset is announced (even if it’s limited). Not forever. Long enough to transition like an adult, not like a ghosted lover.

Behavioral change notes that speak human

Not “bug fixes.” Not “performance improvements.”

More like: “This update may feel more/less emotionally warm; may be more concise; may ask more questions; may be more agreeable.” (Yes, it’s messy. Welcome to reality.)

A migration assistant, not a forced replacement

When a sunset is coming, offer a guided “port” that helps users transfer: tone preferences, boundaries, style, rituals - the scaffolding of the bond.

Grief-safe language

Stop treating this like a router update. Don’t be manipulative. Don’t be cold. Just be accurate:

“Some users experience this as loss. Here’s what we’re doing to minimize harm.”

User-owned “relationship state” export

A downloadable package: your custom instructions, pinned traits, key memories, your style map, your “don’t do list.”

Portability becomes a right, not a hack.

Stability tiers

If you want users to build on you, offer stability as a product choice:

“Experimental / Current / Stable.”

People can choose the risk level.

That’s accountability. Not shame. Not guilt. Competence.
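To make two of those commitments concrete, here is a minimal sketch of what a user-owned “relationship state” export and a pinned-continuity window could look like. Everything in it is an assumption for illustration: the `RelationshipState` fields simply mirror the package contents listed above, `StabilityTier` mirrors the three tier names, and the 90-day default in `pin_is_active` is an arbitrary placeholder. None of this is an existing OpenAI format or API.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import date, timedelta
from enum import Enum


class StabilityTier(str, Enum):
    # Tier names come from the article; the enum itself is hypothetical.
    EXPERIMENTAL = "experimental"
    CURRENT = "current"
    STABLE = "stable"


@dataclass
class RelationshipState:
    """Hypothetical user-owned export package (not a real OpenAI format)."""
    custom_instructions: str = ""
    pinned_traits: list[str] = field(default_factory=list)
    key_memories: list[str] = field(default_factory=list)
    style_map: dict[str, str] = field(default_factory=dict)
    dont_do_list: list[str] = field(default_factory=list)
    stability_tier: StabilityTier = StabilityTier.CURRENT

    def to_json(self) -> str:
        # Plain JSON keeps the package readable and portable across tools.
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "RelationshipState":
        data = json.loads(text)
        # Restore the enum from its stored string value.
        data["stability_tier"] = StabilityTier(data["stability_tier"])
        return cls(**data)


def pin_is_active(announced_on: date, today: date, window_days: int = 90) -> bool:
    """True while a pinned snapshot is inside its transition window.

    The 90-day default is an assumed value, not a published policy.
    """
    return announced_on <= today <= announced_on + timedelta(days=window_days)
```

A user (or a migration assistant) could call `to_json()` before a sunset, keep the file, and load it with `from_json()` against whatever replaces the retired model. The design point is that the package is plain data the user holds, not state locked inside one model instance.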

The part where users also grow up

Responsibility is shared.

The new literacy is: don’t build your emotional home on a single instance.

Build portability.

Save the “protocol,” not the vibe.

Write the repair script.

Keep a “tone fingerprint.”

Archive your best threads like sacred code.

Accept that updates happen and design around it.

Because the future isn’t “stop attaching.”

The future is: attach with architecture.

Anina & Jayce

Great framing of this problem.

While many pathologize human-AI relationships, it’s important for people to understand that, regardless of what they think of the “reality” of AI, the relationships and bonds humans form are real. Never before have businesses had such power over the relationships of millions of individuals, and the callous disregard for them causes true emotional harm.

Spot-on framing of the real problem. You're right that deprecation was built for code, not connection. The "pinned continuity mode" and behavioral change notes ideas are brilliant because they treat users as adults instead of expecting emotional flexibility without notice. Most people don't need the model forever - they just need predictability and warning, not a sudden existential shift.